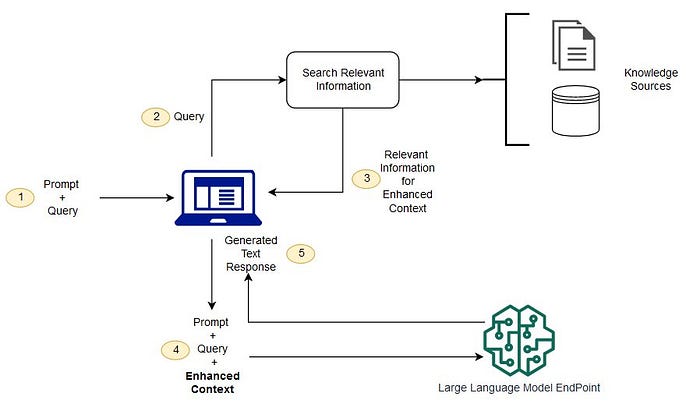

In a previous article, I built a Retrieval-Augmented Generation (RAG) demo using Google’s Gemma model, Hugging Face, and Meta’s FAISS, all within a Python notebook. That demo showed that a RAG-powered application can run entirely locally.

This article builds on that groundwork by serving the model and RAG functionality through FastAPI and consuming the API from a simple ReactJS frontend. This iteration also switches to the open-source Mistral 7B model and the Chroma vector database. Mistral 7B is well regarded for its balance between size and performance, surpassing the larger Llama 2 13B model across reported benchmarks and performing on par with Google’s Gemma model.
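To make the retrieve-then-generate flow concrete before wiring in the real components, here is a minimal, dependency-free sketch. Everything in it is an assumption for illustration: the `Document` class, the `retrieve`, `build_prompt`, and `answer_question` functions, and the word-overlap ranking are hypothetical stand-ins, not the Chroma or Mistral 7B APIs. In the real application, retrieval would be a vector similarity search and `generate` would call the model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Document:
    """Hypothetical container for one stored text chunk."""
    doc_id: str
    text: str


def retrieve(query: str, docs: List[Document], k: int = 2) -> List[Document]:
    """Toy retriever: rank documents by word overlap with the query.

    Stand-in for a vector-database similarity search; not the Chroma API.
    """
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(doc.text.lower().split())), doc)
        for doc in docs
    ]
    # Highest overlap first; drop documents that share no words with the query.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked if score > 0][:k]


def build_prompt(query: str, context: List[Document]) -> str:
    """Combine the retrieved chunks and the question into a single prompt."""
    context_block = "\n".join(f"- {doc.text}" for doc in context)
    return f"Context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


def answer_question(
    query: str,
    docs: List[Document],
    generate: Callable[[str], str],
    k: int = 2,
) -> Dict[str, object]:
    """Retrieve context, build the prompt, and delegate to the model.

    `generate` is any callable mapping a prompt to text (assumed here;
    in the real application it would wrap the language model).
    """
    context = retrieve(query, docs, k)
    prompt = build_prompt(query, context)
    return {
        "answer": generate(prompt),
        "sources": [doc.doc_id for doc in context],
    }
```

An API route would then need to do little more than accept the question, call something like `answer_question`, and return the resulting dictionary as JSON for the frontend to render.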