Monday, June 12, 2023

Database replication using Confluent (Kafka) and Debezium

Friday, May 5, 2023

Migrating IBM DB2 to Google Bigtable and achieving FIPS-compliant encryption using a custom Java encryption library

This is about a project I undertook recently. The purpose was to migrate a large volume of on-prem DB2 data to Google Bigtable using Dataproc, a Spark-based solution on Google Cloud. A few things from the project are worth noting:

1. I had to use Scala to develop the solution because the encryption library was developed in Java. Although the library has some interoperability with Python, it comes with a lot of limitations, which stopped me from using Python. Scala and Java, on the other hand, work together seamlessly.

2. For FIPS compliance, I had to use the Bouncy Castle library, which introduced dependency-management issues ("dependency hell", as some call it). In the end I had to use Maven, with shading, rather than sbt to manage the dependencies because of the complexity.

3. I used the hbase-spark connector to talk to Bigtable. Since I was using Spark 3, I had to compile the connector library manually; see https://github.com/apache/hbase-connectors/tree/master/spark
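To illustrate the first point, here is a minimal sketch of Scala calling a Java crypto API directly. It is not the project's encryption library: `FieldCrypto` is a hypothetical name, and the code uses the JDK's built-in `javax.crypto` AES-GCM as a stand-in. It also does not configure a FIPS-validated provider, so it says nothing about compliance on its own.

```scala
import java.nio.charset.StandardCharsets.UTF_8
import java.security.SecureRandom
import javax.crypto.Cipher
import javax.crypto.spec.{GCMParameterSpec, SecretKeySpec}

// Hypothetical stand-in for the Java encryption library (assumption):
// plain JDK AES-GCM, called from Scala with no wrapper or bridge layer.
object FieldCrypto {
  private val IvBytes = 12   // 96-bit nonce, the usual size for GCM
  private val TagBits = 128  // authentication tag length in bits
  private val random  = new SecureRandom()

  /** Encrypts a string and returns IV followed by ciphertext and tag. */
  def encrypt(key: Array[Byte], plaintext: String): Array[Byte] = {
    val iv = new Array[Byte](IvBytes)
    random.nextBytes(iv) // fresh random nonce for every call
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.ENCRYPT_MODE, new SecretKeySpec(key, "AES"),
      new GCMParameterSpec(TagBits, iv))
    iv ++ cipher.doFinal(plaintext.getBytes(UTF_8))
  }

  /** Splits off the IV, then decrypts and verifies the rest. */
  def decrypt(key: Array[Byte], payload: Array[Byte]): String = {
    val (iv, ciphertext) = payload.splitAt(IvBytes)
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.DECRYPT_MODE, new SecretKeySpec(key, "AES"),
      new GCMParameterSpec(TagBits, iv))
    new String(cipher.doFinal(ciphertext), UTF_8)
  }
}
```

In a Spark job, functions like these could be applied to each column value before the rows are written out. The Java classes are used as if they were Scala classes, which is the seamless interop described above.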

(This project was done about a year ago.)

Handling Large Messages With Apache Kafka

While working on handling large messages with Kafka, I came across a few useful reference articles. I am bookmarking them here for anyone who needs them:

https://dzone.com/articles/processing-large-messages-with-apache-kafka

https://www.morling.dev/blog/single-message-transforms-swiss-army-knife-of-kafka-connect/

https://www.kai-waehner.de/blog/2020/08/07/apache-kafka-handling-large-messages-and-files-for-image-video-audio-processing/

https://docs.confluent.io/cloud/current/connectors/single-message-transforms.html#cc-single-message-transforms-limitations

Tuesday, April 4, 2023

Object Tracking Demo

Elevating LLM Deployment with FastAPI and React: A Step-By-Step Guide

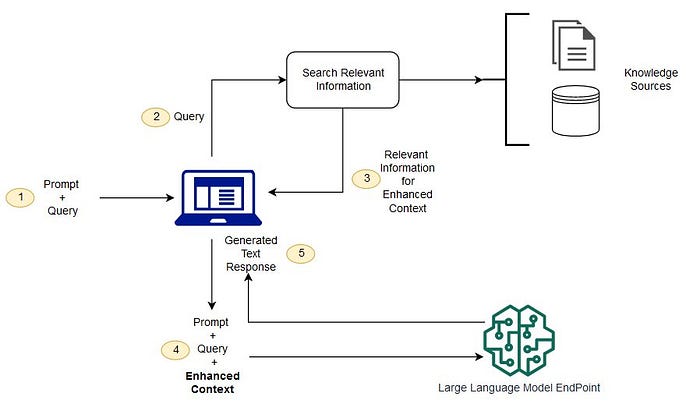

In a previous exploration , I delved into creating a Retrieval-Augmented-Generation (RAG) demo, utilising Google’s gemma model, Hugging ...

-

Error 15401: Windows NT user or group '%s' not found. Check the name again. SELECT name FROM syslogins WHERE sid = SUSER_SID ('Y...

-

start /wait D:\Servers\setup.exe /qn VS=[VIRTUALSERVER] INSTANCENAME=[MSSQLSERVER] REINSTALL=SQL_Engine REBUILDDATABASE=1 ADMINPASSWORD=[CUR...

-

Finally, it is time. E4SE 811 and eBackoffice 736 will replace our current 810b/735a environment after staying so many years. Just got the n...